
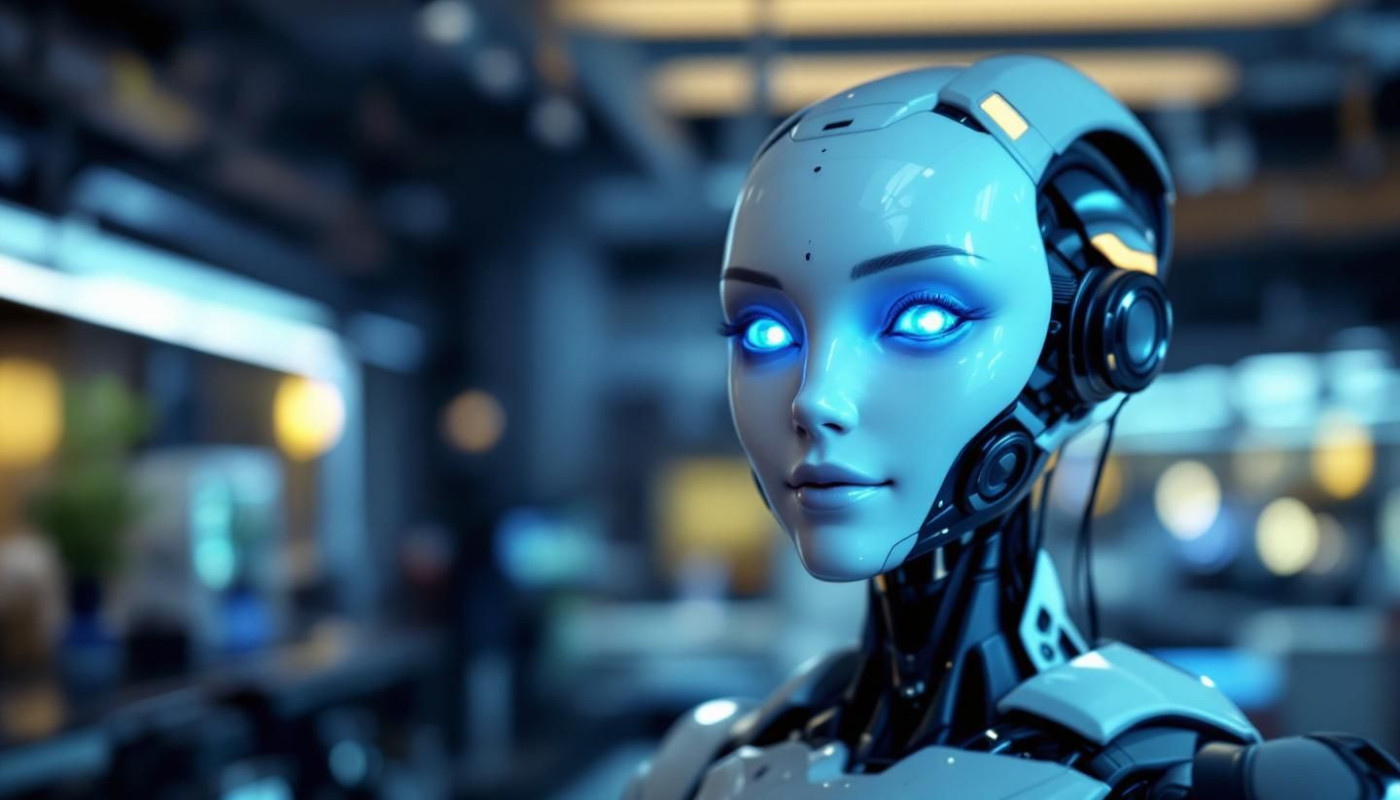

In the digital age, artificial intelligence has ushered in a new era of content creation, particularly in the visual realm. The technology presents unprecedented opportunities for innovation, but it also raises significant ethical questions that warrant careful scrutiny. As the potential of AI-generated visual content continues to expand, it becomes imperative to examine the moral implications of its use. This article explores the delicate balance between creative freedom and ethical responsibility in the age of AI.

The Ethics of AI Authorship

Navigating the ethical challenges of AI authorship in visual content creation requires a nuanced understanding of both legal precedent and technological capability. At the heart of the dilemma lies the question of originality: can AI-generated content be considered original when it is the product of machine learning algorithms trained on vast amounts of existing human-created work? Human creators, who may feel that AI systems undermine their creative labor, hold rights that sit uneasily with the idea of attributing authorship to non-sentient systems. Intellectual property frameworks are being stretched to their limits as copyright law struggles to keep pace with AI development; the U.S. Copyright Office, for instance, has taken the position that material produced without human authorship is not eligible for copyright registration. Clear guidelines are needed to balance the creative rights of individuals with the innovative potential of AI. Experts in intellectual property law and AI technology are best placed to chart this territory and to ensure that human creativity is safeguarded in an era of algorithmically generated content.

Consent and AI-generated Imagery

As the capabilities of artificial intelligence grow, AI-generated visual content has introduced a range of ethical challenges, particularly concerning consent. AI can create strikingly realistic depictions, and including a real person's likeness in such content raises significant privacy concerns. Obtaining explicit consent is central to protecting personal rights and to ensuring that individuals control how their image is used. Privacy law experts and ethicists specializing in AI and digital media warn that, without robust consent frameworks, people's images are highly vulnerable to misuse.

Unauthorized replication of a person's likeness allows deepfake technology to be used in deceptive or harmful ways, with potentially grave repercussions for the people depicted. As we navigate this new frontier, it is vital to establish clear protocols that respect the nuances of consent, privacy, and personal rights. Practical guides to generating AI visuals, such as one offering seven tips for creating the best AI images or art, likewise stress the importance of working within ethical boundaries.

Addressing Bias in AI Visual Content

AI bias is a pervasive issue in machine learning: algorithms can inadvertently perpetuate stereotypes and reinforce societal inequalities. Often this is because the training datasets used to teach AI systems contain skewed representations of gender, race, and other sociodemographic factors. If, for example, an image model's training data overwhelmingly depicts one gender in a given profession, the model is likely to reproduce that imbalance when asked to depict the profession. Algorithmic bias reflects past and present disparities, and if left unchecked it can also exacerbate them. Curating training datasets with broad, balanced representation of human experience is therefore essential, both for fairness in AI-generated visual content and for the development of ethical AI systems.

Strategies for minimizing bias include rigorous dataset auditing, de-biasing techniques, and inclusive design principles applied throughout the development process. Guidance from data scientists with expertise in ethical AI development, or from scholars specializing in AI ethics, can be invaluable in meeting these challenges and ensuring that AI systems benefit society as a whole without discrimination.
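As a rough illustration of the dataset-auditing step, the Python sketch below counts how often each demographic group appears in a dataset's annotations and flags groups that fall well below an equal share. It assumes each image already carries a group annotation (the `group_a`-style names and the `representation_report` function are hypothetical), and the "half of an equal share" threshold is an arbitrary illustrative baseline, not an established fairness criterion.

```python
from collections import Counter


def representation_report(labels, tolerance=0.5):
    """Report each group's share of a dataset and flag under-represented groups.

    `labels` holds one (hypothetical) demographic annotation per image.
    A group is flagged when its share falls below `tolerance` times an
    equal share (1 / number of groups). This baseline is illustrative only.
    """
    counts = Counter(labels)
    if not counts:
        return {}  # nothing to audit
    total = sum(counts.values())
    threshold = tolerance / len(counts)  # tolerance * (1 / number of groups)
    report = {}
    for group, n in sorted(counts.items()):
        share = n / total
        # (rounded share, True if the group is under-represented)
        report[group] = (round(share, 3), share < threshold)
    return report


labels = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
print(representation_report(labels))
# {'group_a': (0.7, False), 'group_b': (0.25, False), 'group_c': (0.05, True)}
```

Note that a group missing from the labels entirely will not appear in the report at all, so a real audit would compare the counts against an expected list of groups and would also look at intersections (for example, gender within each profession) rather than single attributes.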

The Societal Impact of AI Visual Content

The advent of AI-generated visual content, particularly through generative adversarial networks (GANs), raises profound questions about its societal impact. Because GANs can produce images that are often difficult to tell apart from human-made ones, they have the potential to significantly alter human perception. By producing visuals that challenge our sense of what is authentic, the technology may influence individual viewpoints and also drive broader cultural shifts. Those shifts could show up in how we value and interpret human-created art as AI-generated pieces become more common and harder to distinguish from traditional art forms.

Moreover, as AI becomes further ingrained in culture, it presents a paradox: it can both enrich and distort our experience of visual media. On one hand, the abundance of AI-generated visuals can democratize art creation, providing a diverse and plentiful supply of images that inspire and provoke thought across different strata of society. On the other hand, the same proliferation risks distorting the media landscape and unsettling our sense of what is real, since sophisticated images can be used to manipulate and mislead.

The balance between the benefits and drawbacks of AI in culture is delicate and requires vigilant attention. As we navigate this new landscape, we must remain aware of the effects AI-generated visuals can have on our shared understanding of reality and on the artistic value we ascribe to human ingenuity. The conversation about the societal impact of AI visual content is therefore not merely an academic exercise but a necessary discourse for preserving the integrity of our cultural and visual heritage.

Transparency and Accountability in AI Content Creation

In the rapidly evolving landscape of digital media, transparency and accountability in the creation and distribution of AI-generated visual content matter more than ever. Because sophisticated algorithms can produce images that are often indistinguishable from human-made ones, there is a significant risk of misinformation and eroding trust. Standards for AI content that clearly distinguish computer-generated from human-made work are therefore essential. Such a distinction upholds integrity in digital media and fosters a culture of honesty and authenticity, which is fundamental to the relationship between content creators and their audiences.

Content discernibility, the ability of viewers to tell how a piece of content was made, can be addressed with content attribution technology. Such technology acts as a kind of digital watermark that identifies the content's origin, giving users an immediate signal about the source of what they are viewing. AI policymakers and technologists specializing in digital ethics are responsible for developing and promoting these standards, and in doing so they help maintain the trust on which digital media relies. Without such measures, the line between AI-generated and human-created content could blur, leading to a future in which authenticity in digital content is the exception rather than the rule.
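To make the idea of content attribution more concrete, here is a simplified Python sketch. A true watermark is embedded in the image pixels; this sketch instead uses a simpler, swapped-in technique: a signed provenance label that binds an "AI-generated" claim to the exact image bytes, so any change to the image or to the claim is detectable. The key, the function names, and the label fields are all hypothetical, and the shared-secret HMAC is an assumption chosen for brevity; real provenance standards such as C2PA rely on certificate-based signatures instead.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret held by the publishing platform (illustration only).
SECRET_KEY = b"example-key-do-not-use"


def _sign(manifest: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_label(image_bytes: bytes, ai_generated: bool, generator: str) -> dict:
    """Bind an origin claim to the exact image bytes and sign it."""
    manifest = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "ai_generated": ai_generated,
        "generator": generator,  # hypothetical model identifier
    }
    return {"manifest": manifest, "signature": _sign(manifest)}


def verify_label(image_bytes: bytes, label: dict) -> bool:
    """Return True only if the label is untampered and matches these bytes."""
    manifest = label["manifest"]
    if not hmac.compare_digest(_sign(manifest), label["signature"]):
        return False  # the origin claim was altered after signing
    return manifest["sha256"] == hashlib.sha256(image_bytes).hexdigest()


image = b"raw image bytes"
label = make_label(image, ai_generated=True, generator="hypothetical-model-v1")
print(verify_label(image, label))         # True
print(verify_label(image + b"!", label))  # False: bytes no longer match
```

A label like this only helps if it travels with the file: stripping the metadata removes it entirely, which is one reason watermarks embedded in the image itself are pursued alongside signed metadata.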
